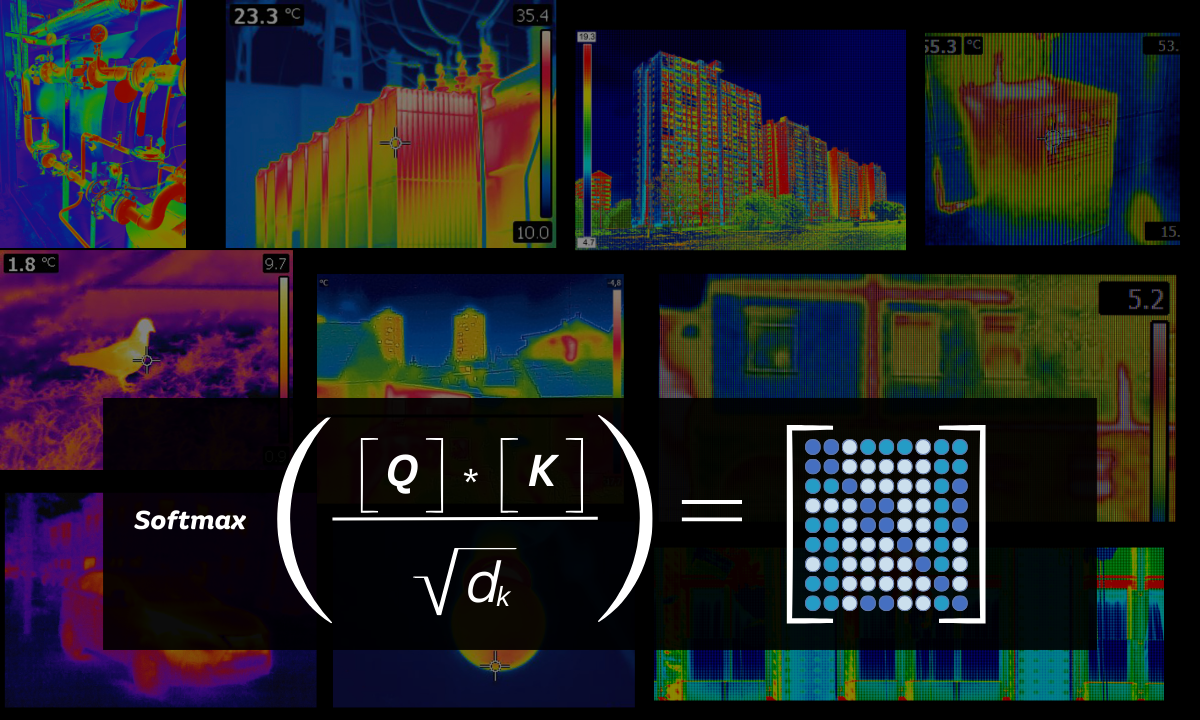

“Attention Is All You Need”

This paper introduced the Transformer, a neural network architecture that relies solely on attention mechanisms, dispensing with recurrence and convolutions. The architecture quickly became the dominant approach for sequence-to-sequence tasks such as machine translation, text summarization, and…