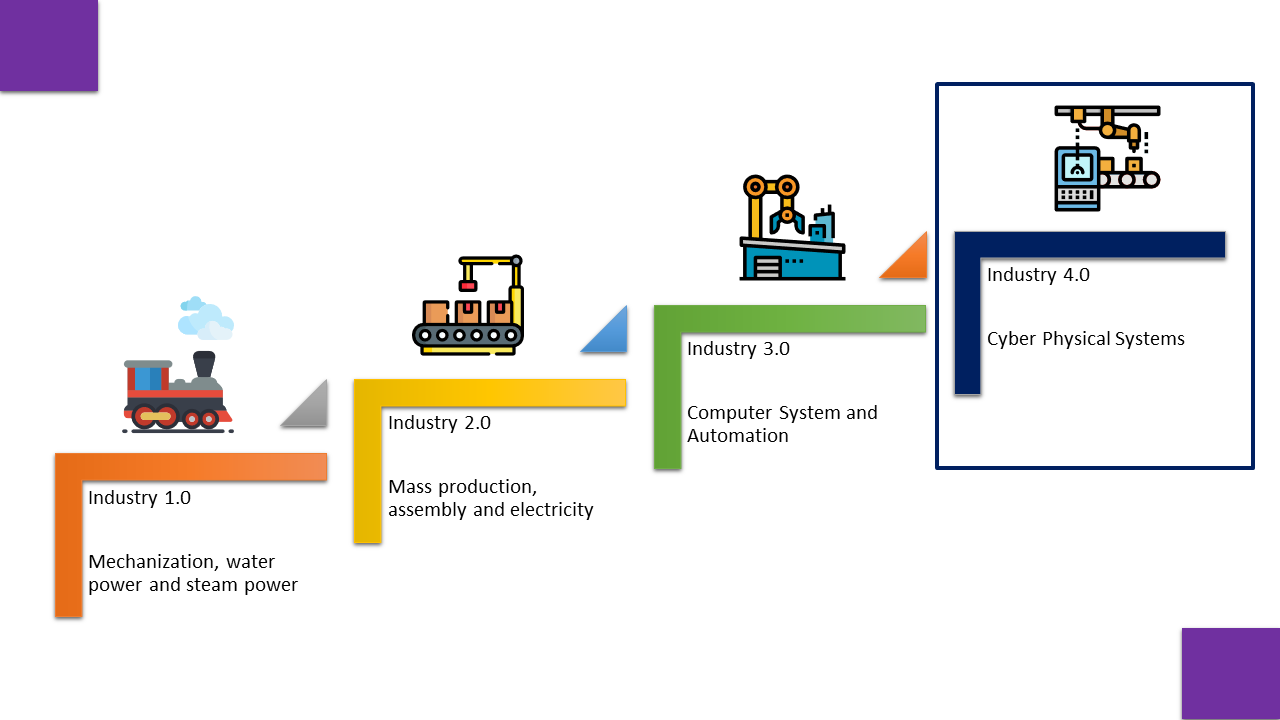

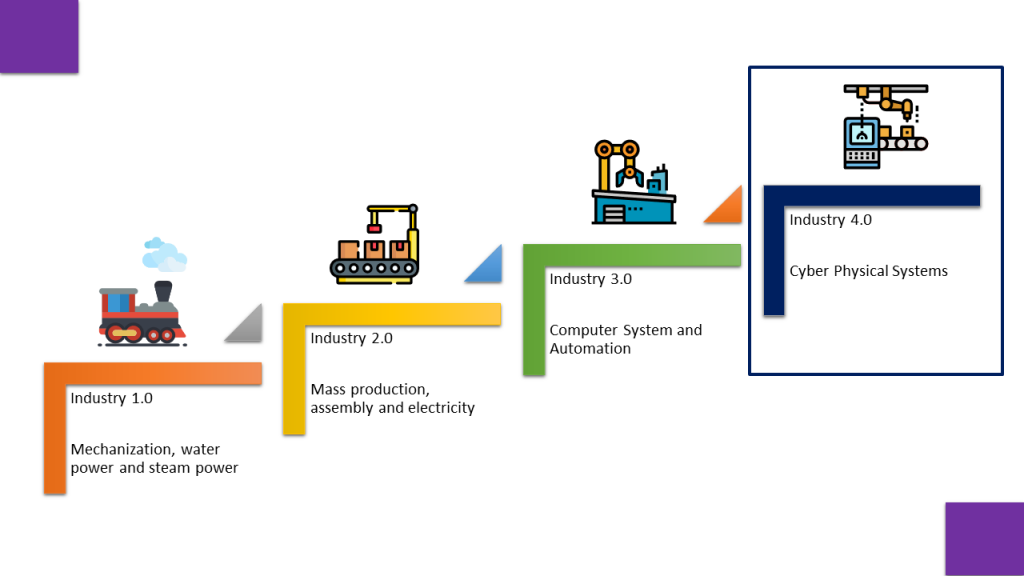

We are in the middle of the Fourth Industrial Revolution, where data is the fuel that drives innovation. Let's briefly review the industrial revolutions that have shaped the history of technology.

The first revolution was mechanical and began around 1784, when machines were powered by water and steam. The steam engine and the automation of textile manufacturing were the leading innovations of that time.

The second revolution, which began around 1870, was electrical. Ford’s assembly line is the best-known innovation of this era.

The third industrial revolution began around 1969 with the advent of microcontrollers and IT-automated manufacturing, at about the time the ARPANET, the precursor of the internet, was launched.

Then came the fourth industrial revolution, which is distinguishable from the third because it is where humans meet the cyber world. This revolution is resulting in smarter cities, science-fiction-like robotics, edge computing, and much more.

AI-associated technologies have enormous potential to enhance our lives as learners and workers. Researchers and developers are continuously improving AI solutions to mimic human behavior in routines such as problem solving, learning, and language processing. Next-generation AI will pursue the objectives that humans consider essential for intelligent machines. Let's explore the AI-associated technologies of 2021.

Robotics is the field of AI that deals with designing and building intelligent, human-like machines. Together, AI and robotics are a powerful combination for automation tasks. Mobile robots with AI technology are already used commercially for tasks such as cleaning offices and large spaces, carrying goods in factories, warehouses, and hospitals, managing inventory, and working in environments that are too risky for humans.

Computer Vision focuses on the relationship between the 3D world and its corresponding 2D image, with an emphasis on the location and identity of objects. With the growth of mobile-mounted cameras and surveillance systems (images and video), computer vision has a fast-growing collection of useful applications in many domains. Its applications fall into subfields such as image classification, object detection, image segmentation, and pose estimation.
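The 3D-to-2D relationship mentioned above can be illustrated with a pinhole camera projection. This is only a minimal sketch: the function name `project_point` and the focal length and image-center values are assumptions chosen for illustration, not details from this article.

```python
def project_point(point_3d, focal_length, center_x, center_y):
    """Project a 3D point (X, Y, Z) in camera coordinates onto a 2D image.

    Assumes an idealized pinhole camera: no lens distortion, and the
    focal length expressed in pixels.
    """
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    # Perspective division: objects farther away (larger Z) appear smaller.
    u = focal_length * x / z + center_x
    v = focal_length * y / z + center_y
    return u, v


# Hypothetical camera: 800-pixel focal length, 640x480 image.
pixel = project_point((1.0, 2.0, 4.0), 800.0, 320.0, 240.0)
print(pixel)
```

Moving the same point farther from the camera pulls its projection toward the image center, which is why depth cannot be read directly from a single 2D image.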

Machine Learning is the field of study that gives computers the ability to learn without being explicitly programmed. When we provide data to a machine learning model, it learns patterns from that data and produces relevant predictions. Machine learning is a subfield of AI that uses techniques such as classification, regression, and clustering to get meaningful results from data.
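As a small illustration of learning from data rather than from hand-written rules, here is a sketch of regression using an ordinary least-squares line fit in plain Python. The data (hours studied versus exam score) and the function names are made up for the example.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: hours studied and the resulting exam score.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = fit_line(hours, scores)
print(predict(model, 5.0))  # prediction for an input the model has not seen
```

Nothing in the code states how hours relate to scores; the slope and intercept are estimated from the examples. In practice a library such as scikit-learn would usually handle this.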

Robotic Process Automation (RPA) does not involve any physical robots. It uses software robots that interact with software applications the way humans do, and it is designed to minimize repetitive activity. AI-enabled RPA softbots act as knowledge-based dynamic systems that can operate beyond fixed, scripted automation. Today, the growing demand for virtual staff has increased the need for these softbots.

Natural Language Processing (NLP) is a branch of Artificial Intelligence (AI) that studies how machines can understand human language and converse like humans. The global NLP market is projected to grow from USD 11.6 billion in 2020 to USD 35.1 billion by 2026, at a compound annual growth rate (CAGR) of 20.3% over the forecast period. Examples of NLP innovations of our time include Alexa, Siri, Cortana, IBM Watson, and Grammarly.
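The CAGR figure can be checked against the start and end values with a few lines of arithmetic. This sketch assumes six compounding years (2020 to 2026); the function names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Market size in USD billions, as quoted above.
implied_rate = cagr(11.6, 35.1, 6)
projected_2026 = project(11.6, 0.203, 6)

print(f"implied CAGR: {implied_rate:.1%}")
print(f"projected 2026 market size: {projected_2026:.1f}")
```

The implied rate comes out at roughly 20%, consistent with the quoted 20.3%.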

After using 4G networks for data transfer, downloads, uploads, and voice and video calling, we are now eager to move to 5G networks, which support low latency, very high speeds (e.g., eMBB), a massive number of connected devices, and a heterogeneous mix of traffic types from a diverse suite of applications. AI/ML is being used for 5G network planning and for automating network operations such as provisioning, optimization, fault prediction, security, fraud detection, and network slicing. It also helps reduce operating costs and improve both quality of service and customer experience through chatbots, recommender systems, and techniques such as robotic process automation (RPA).

The simple definition of the Internet of Things (IoT) is connecting any device that has an on/off switch to the internet. This includes everything from TVs, washing machines, air conditioners, lamps, fans, wearable devices, walking sticks for persons with disabilities, speakers, and coffee machines to anything else we can think of. The analyst firm Gartner predicted that by 2020 there would be over 26 billion connected devices. That’s a lot of connections (some estimates put this number much higher, at over 100 billion). IoT is a giant network of connected ‘beings’, be they things or people. It covers the relationships between people and people, people and things, and things and things. IoT opens up endless virtual opportunities and connections, many of which we can’t even imagine or fully understand today, and it will grow enormously with time.

To incorporate intelligence into a machine, we need a large amount of data for teaching and training. Traditional data management tools cannot handle such large volumes of data, so big data technologies are used to manage and process them. AI algorithms then use that data to automate systems and make decisions by drawing out meaningful patterns.

Cloud computing offers scalable resources, managed services, and serverless architecture over the internet, so we pay only for the resources we use. Examples of AI-enabled cloud platforms are AWS, Azure, and GCP. These platforms provide the infrastructure to train machine learning models on large volumes of data and generate meaningful predictions.

Biometrics are the physical or behavioral characteristics of a person that can be used to digitally identify that person and manage access control. We use fingerprint, face, voice, or retina locks on our cellphones countless times a day. AI helps devices recognize face, voice, gait, pose, and other physical and behavioral modalities.

Augmented Reality (AR) blends digital components into the real, physical world to create an enhanced environment. Virtual Reality (VR), by contrast, is a computer-generated simulation of an alternate world or reality. It is used in 3D movies and video games.

Blockchain, sometimes referred to as Distributed Ledger Technology (DLT), makes the history of any digital asset unalterable and transparent through the use of decentralization and cryptographic hashing. A simple analogy for understanding blockchain technology is a Google Doc. When we create a document and share it with a group of people, the document is distributed instead of copied or transferred. This creates a decentralized distribution chain that gives everyone access to the document at the same time. No one is locked out awaiting changes from another party, and all modifications to the document are recorded in real time, making changes completely transparent.
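To show how cryptographic hashing makes history hard to alter, here is a toy hash chain built with Python's standard `hashlib`. It is only a sketch of the hashing idea: it has no decentralization, consensus, or networking, and the block layout and function names are assumptions for illustration.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """SHA-256 over the block's contents, including the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a block that is linked to the current last block by its hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain):
    """Recompute every hash and check every link between blocks."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
    return True


ledger = []
add_block(ledger, "Alice pays Bob 5")
add_block(ledger, "Bob pays Carol 2")
print(is_valid(ledger))

ledger[0]["data"] = "Alice pays Bob 500"  # tamper with history
print(is_valid(ledger))
```

Because each block's hash covers the previous block's hash, changing any earlier record invalidates the check unless every later block is recomputed as well.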

Rather than storing information as bits represented by 0s or 1s, as conventional digital computers do, quantum computers encode information in quantum bits, or qubits, which are typically realized with subatomic particles such as electrons or photons. Generating and managing qubits is a scientific and engineering challenge. Quantum computing and artificial intelligence are both transformational technologies, and artificial intelligence is likely to require quantum computing to achieve significant progress in the coming years.