A few metrics are helpful when evaluating the performance of ML/DL models:

- False negatives and false positives are samples that were incorrectly classified

- True negatives and true positives are samples that were correctly classified

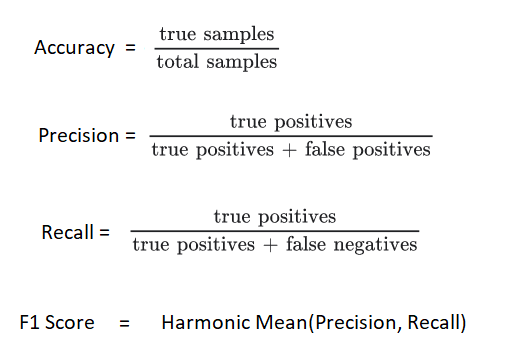

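- Accuracy is the percentage of examples correctly classified: $\frac{\text{true positives} + \text{true negatives}}{\text{total samples}}$

- Precision is the percentage of predicted positives that were correctly classified: $\frac{\text{true positives}}{\text{true positives} + \text{false positives}}$

- Recall is the percentage of actual positives that were correctly classified: $\frac{\text{true positives}}{\text{true positives} + \text{false negatives}}$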

- AUC refers to the Area Under the Curve of a Receiver Operating Characteristic curve (ROC-AUC). This metric is equal to the probability that a classifier will rank a random positive sample higher than a random negative sample.

- AUPRC refers to the Area Under the Precision-Recall Curve. This metric summarizes the precision-recall pairs obtained at different probability thresholds.
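As a rough illustration of the definitions above, the sketch below computes accuracy, precision, and recall from confusion-matrix counts, and estimates ROC-AUC directly as the fraction of positive-negative pairs that the scores rank correctly. The helper names (`confusion_counts`, `basic_metrics`, `pairwise_auc`), the toy labels and scores, and the 0.5 threshold are all made up for illustration; none of them are part of Keras.

```python
from itertools import product


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def basic_metrics(tp, fp, tn, fn):
    """Return (accuracy, precision, recall); 0.0 when a denominator is zero."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


def pairwise_auc(y_true, scores):
    """Fraction of (positive, negative) pairs where the positive scores higher.

    Ties count as half a correct ranking. Assumes at least one sample
    of each class is present.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p, n in product(pos, neg)
    )
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 3 positives, 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
accuracy, precision, recall = basic_metrics(tp, fp, tn, fn)
auc = pairwise_auc(y_true, scores)

print(f"tp={tp} fp={fp} tn={tn} fn={fn}")
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
print(f"auc={auc:.3f}")
```

Note that accuracy, precision, and recall depend on the chosen threshold, while the pairwise AUC only depends on how the scores are ordered.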

```python
import tensorflow as tf
from tensorflow import keras

METRICS = [
    keras.metrics.TruePositives(name='tp'),
    keras.metrics.FalsePositives(name='fp'),
    keras.metrics.TrueNegatives(name='tn'),
    keras.metrics.FalseNegatives(name='fn'),
    keras.metrics.BinaryAccuracy(name='accuracy'),
    keras.metrics.Precision(name='precision'),
    keras.metrics.Recall(name='recall'),
    keras.metrics.AUC(name='auc'),
    keras.metrics.AUC(name='prc', curve='PR'),  # precision-recall curve
]
```

See the following example, where accuracy is not the right measure:

https://www.tensorflow.org/tutorials/structured_data/imbalanced_data
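To make the point concrete, here is a small sketch with made-up numbers (not taken from the linked tutorial): a dataset with 1% positives and a degenerate classifier that always predicts the negative class. Accuracy looks excellent even though no positive sample is ever found.

```python
# Hypothetical imbalanced dataset: 10 positives among 1000 samples.
y_true = [1] * 10 + [0] * 990

# A degenerate "classifier" that always predicts the negative class.
y_pred = [0] * len(y_true)

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

accuracy = (tp + tn) / len(y_true)
recall = tp / (tp + fn)

print(f"accuracy={accuracy:.2f}")  # high, because negatives dominate
print(f"recall={recall:.2f}")      # zero, because every positive is missed
```

Precision is undefined in this case, since the classifier makes no positive predictions at all. This is one reason to track recall, precision, and AUPRC alongside accuracy on imbalanced data.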